An Eye for an Item

Features

Automatic image collection with blurry-image filtering, teleoperation over SSH or Bluetooth, 2D localization from a differential steering model, 2D odometry drift correction via AprilTag detections, support for text and image queries for lost objects, and location stamping of lost-object images.
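Blurry-image filtering is not described in detail here. One common approach scores each grayscale frame by the variance of its Laplacian and discards frames that fall below a threshold. The sketch below uses that approach, which may not be the one this robot uses. The function names and the threshold of 100 are hypothetical and would need tuning for the actual webcam.

```python
import numpy as np


def laplacian_variance(gray: np.ndarray) -> float:
    """Variance of the 4-neighbour Laplacian of a grayscale image.

    Sharp images have strong edges, so their Laplacian varies a lot;
    blurry images have weak edges and a low variance.
    """
    img = gray.astype(np.float64)
    # Discrete Laplacian on interior pixels: up + down + left + right - 4 * centre
    lap = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
           - 4.0 * img[1:-1, 1:-1])
    return float(lap.var())


def is_blurry(gray: np.ndarray, threshold: float = 100.0) -> bool:
    """Flag a frame as blurry if its Laplacian variance is below a
    (hypothetical, camera-dependent) threshold."""
    return laplacian_variance(gray) < threshold
```

A uniform frame has zero Laplacian variance and is flagged as blurry, while a high-contrast pattern such as a checkerboard is kept.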

Tools & Technologies

ROS2, Python, C++, Docker, NVIDIA Jetson AGX Orin, ESP32, NanoDB

Challenge

This project details the process of building a robot to find objects in its indoor environment, based on text or image queries supplied by you.

The motivation behind this project is to create an affordable robot that can catalog objects in general, unstructured indoor environments. Applications range from small-scale tasks like finding lost objects in your living room to large-scale ones like inventory tracking in warehouses.

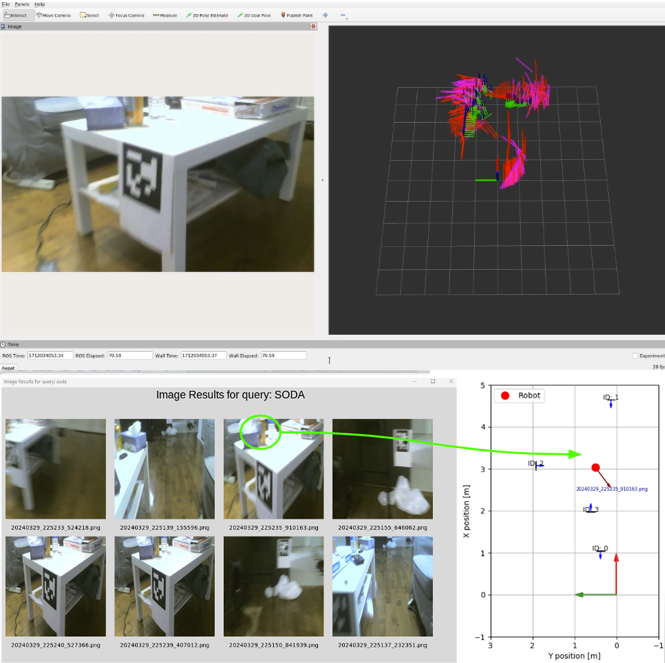

At a high level, the robot drives around its environment, collecting images through its webcam. Its 2D pose (x, y, and yaw) is computed by fusing odometry from the differential steering model with detections of AprilTags placed at known locations around the room.
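The pose pipeline can be sketched in Python under several assumptions. The wheel encoders are assumed to report the distance each wheel travelled since the last update. The AprilTag detector is assumed to provide the tag's pose in the robot frame, already projected to 2D, and each tag's world pose is assumed to be known. The fixed-gain blend in `correct` is a simple complementary filter used only for illustration. The actual fusion method is not specified here, and an extended Kalman filter is a common alternative. All names (`Pose2D`, `odometry_step`, `robot_pose_from_tag`, `correct`, `alpha`) are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x: float
    y: float
    yaw: float  # radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to (-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def odometry_step(pose: Pose2D, d_left: float, d_right: float, wheel_base: float) -> Pose2D:
    """Differential-drive update from left/right wheel travel (metres)."""
    d_center = 0.5 * (d_left + d_right)
    d_yaw = (d_right - d_left) / wheel_base
    # Midpoint integration: translate along the average heading of this step
    mid_yaw = pose.yaw + 0.5 * d_yaw
    return Pose2D(pose.x + d_center * math.cos(mid_yaw),
                  pose.y + d_center * math.sin(mid_yaw),
                  wrap_angle(pose.yaw + d_yaw))


def compose(a: Pose2D, b: Pose2D) -> Pose2D:
    """Express pose b (given in frame a) in a's parent frame."""
    c, s = math.cos(a.yaw), math.sin(a.yaw)
    return Pose2D(a.x + c * b.x - s * b.y, a.y + s * b.x + c * b.y, wrap_angle(a.yaw + b.yaw))


def inverse(p: Pose2D) -> Pose2D:
    """Inverse transform: rotation R^T and translation -R^T t."""
    c, s = math.cos(p.yaw), math.sin(p.yaw)
    return Pose2D(-c * p.x - s * p.y, s * p.x - c * p.y, wrap_angle(-p.yaw))


def robot_pose_from_tag(tag_in_world: Pose2D, tag_in_robot: Pose2D) -> Pose2D:
    """Absolute robot pose: T_world_robot = T_world_tag * inverse(T_robot_tag)."""
    return compose(tag_in_world, inverse(tag_in_robot))


def correct(odom: Pose2D, tag_pose: Pose2D, alpha: float = 0.3) -> Pose2D:
    """Pull the drifting odometry estimate toward the tag-derived pose.

    alpha = 0 keeps odometry unchanged; alpha = 1 snaps to the tag pose.
    The yaw difference is wrapped so the blend takes the shortest way around.
    """
    d_yaw = wrap_angle(tag_pose.yaw - odom.yaw)
    return Pose2D(odom.x + alpha * (tag_pose.x - odom.x),
                  odom.y + alpha * (tag_pose.y - odom.y),
                  wrap_angle(odom.yaw + alpha * d_yaw))
```

Composing the tag's known world pose with the inverse of its observed pose in the robot frame gives an absolute robot pose. Blending toward that pose whenever a tag is visible bounds the drift that accumulates from integrating wheel odometry alone.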

After these images are collected, the user can ask the robot whether it has seen an object, and where, by supplying a text or image query for the object in question. The robot answers each query by displaying the top candidate images matching the query, along with the location where each image was taken, visualized on a map of its environment.
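Query handling can be sketched as a nearest-neighbour search over embeddings. The sketch assumes that a joint text-image encoder such as CLIP maps every stored image and every query (text or image) into a shared embedding space. It also assumes that each image is stored together with the pose at which it was captured. It uses plain NumPy cosine similarity rather than NanoDB's own API, and `top_k_matches` is a hypothetical name.

```python
import numpy as np


def top_k_matches(query_emb: np.ndarray, image_embs: np.ndarray, poses: list, k: int = 3) -> list:
    """Return (index, cosine similarity, pose) for the k images closest to the query.

    query_emb:  shape (D,), embedding of the text or image query
    image_embs: shape (N, D), one embedding per collected image
    poses:      length-N list of (x, y, yaw) stamps, one per image
    """
    # Normalize so the dot product equals cosine similarity
    q = query_emb / np.linalg.norm(query_emb)
    db = image_embs / np.linalg.norm(image_embs, axis=1, keepdims=True)
    scores = db @ q
    # Highest similarity first
    order = np.argsort(-scores)[:k]
    return [(int(i), float(scores[i]), poses[i]) for i in order]
```

Normalizing both sides turns the dot product into cosine similarity, so the ranking depends on direction in embedding space rather than vector magnitude. The returned pose stamps are what would be plotted on the map for each candidate.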

All computation is done onboard the Jetson AGX Orin on the robot.