Self-Driving Car 3D Perception

Features

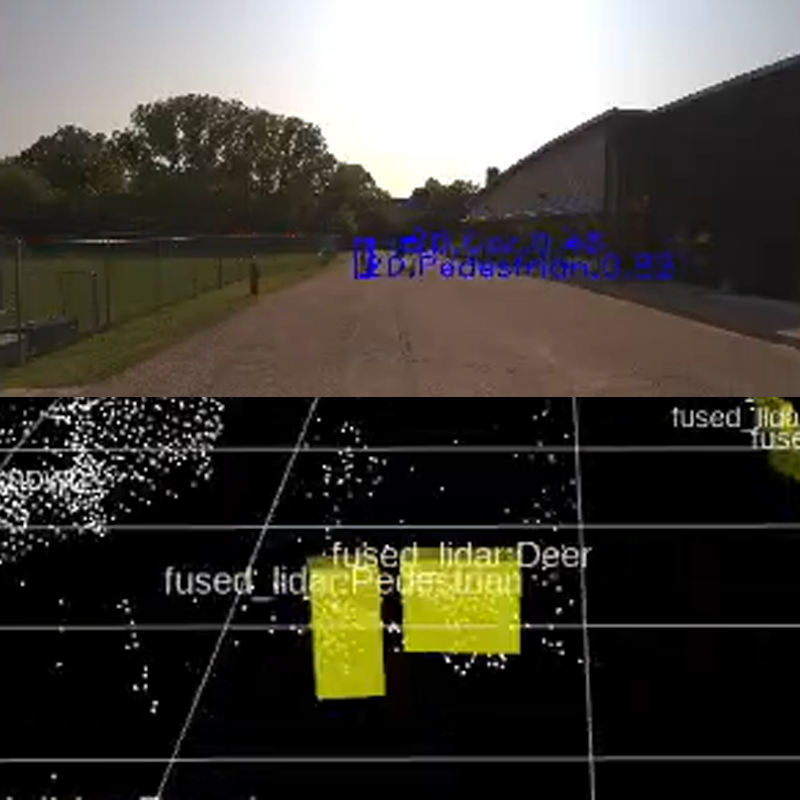

Using LiDAR to detect the 3D pose of relevant traffic objects (cars, pedestrians, signs, barrels, barricades, deer, railroad bars, etc.).

Tools & Technologies

ROS2, C++, Python, Cepton LiDAR

Challenge

In Round 2, Year 3 of the SAE AutoDrive Challenge, self-driving cars must detect common traffic objects so that downstream motion planners and controllers can navigate a variety of dynamic scenarios.

I currently lead the 3D perception team. Our 3D perception module estimates the 6D pose (x, y, z, roll, pitch, yaw) of all relevant traffic objects (cars, pedestrians, signs, barrels, barricades, deer, railroad bars, etc.) from the point clouds of the Cepton solid-state LiDARs. I am experimenting with both classical and deep learning approaches to get the best detection results while keeping compute requirements low enough to run the pipeline at 10 Hz in real time.
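A classical LiDAR detection pipeline is often built from three stages: ground removal, Euclidean clustering, and box fitting. The sketch below is a minimal, hypothetical illustration of that idea, not the team's actual implementation. It assumes an `N x 3` NumPy array in a z-up frame with a flat ground plane at `ground_z`. All function names and thresholds (`remove_ground`, `euclidean_clusters`, `fit_box`, `detect_objects`, `timed_detect`, `radius=0.5`, `min_points=10`) are illustrative choices. Because the road is assumed flat, roll and pitch are fixed at zero and only yaw is estimated, using PCA on each cluster's x-y footprint.

```python
import math
import time
from collections import deque
from dataclasses import dataclass

import numpy as np

FRAME_BUDGET_S = 1.0 / 10.0  # 10 Hz target -> 100 ms per LiDAR frame


@dataclass
class Detection:
    """6D pose (metres, radians) plus box size; roll/pitch fixed at 0 (flat road)."""
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float
    length: float
    width: float
    height: float
    num_points: int


def remove_ground(points, ground_z=0.0, tol=0.2):
    """Keep points more than `tol` m above an assumed flat ground plane z = ground_z."""
    return points[points[:, 2] > ground_z + tol]


def euclidean_clusters(points, radius=0.5, min_points=10):
    """Region growing: points closer than `radius` end up in the same cluster.

    A voxel hash with cell size `radius` limits the neighbour search to the 27
    surrounding cells, because any point within `radius` must lie in one of them.
    """
    keys = np.floor(points / radius).astype(int).tolist()
    cells = {}
    for i, key in enumerate(keys):
        cells.setdefault(tuple(key), []).append(i)

    visited = np.zeros(len(points), dtype=bool)
    clusters = []
    for seed in range(len(points)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue, members = deque([seed]), [seed]
        while queue:
            i = queue.popleft()
            cx, cy, cz = keys[i]
            cand = np.array([j for dx in (-1, 0, 1) for dy in (-1, 0, 1)
                             for dz in (-1, 0, 1)
                             for j in cells.get((cx + dx, cy + dy, cz + dz), ())])
            cand = cand[~visited[cand]]  # unvisited candidates only
            near = cand[np.linalg.norm(points[cand] - points[i], axis=1) <= radius]
            visited[near] = True
            queue.extend(near.tolist())
            members.extend(near.tolist())
        if len(members) >= min_points:
            clusters.append(np.array(members))
    return clusters


def fit_box(cluster):
    """Yaw-aligned box via PCA on x-y (heading) and min/max (size); needs >= 2 points."""
    xy = cluster[:, :2]
    mean_xy = xy.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov((xy - mean_xy).T))
    major = eigvecs[:, np.argmax(eigvals)]
    yaw = math.atan2(major[1], major[0])  # ambiguous by pi: no front/back cue
    c, s = math.cos(yaw), math.sin(yaw)
    rot = np.array([[c, s], [-s, c]])  # world -> box frame (rows: major, minor axis)
    local = (xy - mean_xy) @ rot.T
    lo, hi = local.min(axis=0), local.max(axis=0)
    cx, cy = mean_xy + ((lo + hi) / 2.0) @ rot  # box-frame center back to world
    z_lo, z_hi = cluster[:, 2].min(), cluster[:, 2].max()
    return Detection(x=float(cx), y=float(cy), z=float((z_lo + z_hi) / 2.0),
                     roll=0.0, pitch=0.0, yaw=yaw,
                     length=float(hi[0] - lo[0]), width=float(hi[1] - lo[1]),
                     height=float(z_hi - z_lo), num_points=len(cluster))


def detect_objects(points, ground_z=0.0, ground_tol=0.2, radius=0.5, min_points=10):
    """Ground removal -> Euclidean clustering -> one box per cluster."""
    above = remove_ground(points, ground_z, ground_tol)
    return [fit_box(above[idx]) for idx in euclidean_clusters(above, radius, min_points)]


def timed_detect(points, **params):
    """Run detect_objects and report whether it fit the 10 Hz frame budget."""
    start = time.perf_counter()
    detections = detect_objects(points, **params)
    elapsed = time.perf_counter() - start
    return detections, elapsed, elapsed <= FRAME_BUDGET_S
```

At 10 Hz, each frame has a 100 ms budget, and `timed_detect` checks a call against it. PCA only recovers yaw up to a 180° ambiguity, so a tracker or a learned heading estimate would be needed to tell the front of an object from its back. A production C++ version would more likely build on PCL's `pcl::EuclideanClusterExtraction` and replace the fixed height threshold with a RANSAC plane fit for ground removal.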